Imagine this: you’re coding your autonomous one day. At the end of the day it works fine, but it’s incomplete. One of your programmers says he’ll take the code home and finish it. At your next meeting you run the updated autonomous and nothing works, not even the parts that worked the last time you met. How do you fix this without reverting to the old version?

The answer is simple: debugging.

What is debugging? Say you have a function that places a ring on a peg for the Ring It Up! game. After your programmer got his hands on the autonomous, the ring, for whatever reason, no longer gets placed. The code looks like this:

```c
// ex.c
void place_ring() {
    do_stuff_so_the_ring_is_placed();
}
```

Debugging won’t magically make your program work; it won’t get the ring on the peg. All debugging does is tell you what the robot is doing. You can use debugging tools to see whether a function is executing, or to see what in a program is going wrong. A debugging tool might be something visual, like text or a light, or something auditory, like a noise. Here are some examples of debugging tools:

LEDs attached to your robot that get turned on through software are one debugging tool.

```c
// LED_ex.c
void place_ring() {
    do_stuff_so_the_ring_is_placed();

    if (executed) {
        turn_on_LED();
    }
}
```

This works very well, but it costs quite a bit of money. You would need a prototype board, which is $49.95 from HiTechnic, and LEDs, which are $3.49 each at Radio Shack (assuming you want all the colors). You’d also need to wire everything, which is a lot of work for simple debugging. Although using LEDs this way has its place, it is not the most effective form of debugging.
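Every example in this tutorial checks a flag called `executed` without showing where it comes from. One way to produce it is to have the action function report whether it succeeded. The sketch below shows this in standard C rather than RobotC, so it can run on a computer. The encoder parameter, `LIFT_TARGET`, and `signal_success()` are hypothetical stand-ins. On the robot, `signal_success()` would be `turn_on_LED()` or one of the other methods in this tutorial.

```c
#include <stdbool.h>
#include <stdio.h>

#define LIFT_TARGET 1000  /* hypothetical encoder count at peg height */

static int success_signals = 0;  /* how many times success was signalled */

/* Hypothetical stand-in for the real routine: instead of leaving
   "executed" undefined, it reports whether the lift reached the peg. */
static bool do_stuff_so_the_ring_is_placed(int lift_encoder) {
    return lift_encoder >= LIFT_TARGET;
}

/* Stand-in for turn_on_LED(), PlaySound(), or any other debug output. */
static void signal_success(void) {
    success_signals++;
    printf("place_ring: ring placed\n");
}

bool place_ring(int lift_encoder) {
    bool executed = do_stuff_so_the_ring_is_placed(lift_encoder);
    if (executed) {
        signal_success();
    }
    return executed;
}
```

Because `place_ring()` also returns the flag, a caller can decide what to do next instead of only signalling.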

The NXT comes with several built-in sounds, and the kind people at RobotC have made them available for debugging.

```c
// sound_ex.c
void place_ring() {
    ClearSounds(); // empty any sounds still queued from earlier

    do_stuff_so_the_ring_is_placed();

    if (executed) {
        PlaySound(soundBeepBeep);
    }
}
```

A fuller explanation of how to use the sound functions is given by the people at RobotC. This method is very good: you don’t need to spend extra money, since you already have an NXT, and all you have to do in software is add a couple of extra lines of code. There are drawbacks, though. When we run our autonomous programs, we share a room with two or three other teams, and it becomes hard to hear the NXT’s sounds.
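A single beep can’t tell you which step finished. One option is to beep a different number of times at each checkpoint. The sketch below shows that idea in standard C. `beep()` is a hypothetical stand-in for a call like `PlaySound(soundBeepBeep)`, and the step numbering is an assumption.

```c
#include <stdio.h>

static int beeps_played = 0;  /* total beeps, so the count can be checked */

/* Stand-in for PlaySound(soundBeepBeep) plus a short pause on the NXT. */
static void beep(void) {
    beeps_played++;
    printf("beep\n");
}

/* Beep once per step number, so the last burst you hear tells you how far
   the autonomous got (hypothetical numbering: 1 = drove to the peg,
   2 = raised the lift, 3 = placed the ring). */
void signal_step(int step) {
    for (int i = 0; i < step; i++) {
        beep();
    }
}
```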

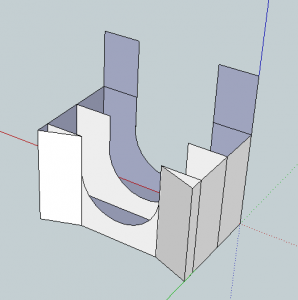

The NXT has an LCD screen that RobotC lets you write to. Its advantage over other debugging devices is that it lets you display plain English.

```c
// NXT_LCD_ex.c
void identify_IR_column() {
    clearDisplay();

    switch (column) {
    case LEFT:
        column_is(LEFT);
        nxtDisplayCenteredTextLine(1, "on the Left");
        break; // without break, execution falls into the next case
    case MIDDLE:
        column_is(MIDDLE);
        nxtDisplayCenteredTextLine(1, "in the Middle");
        break;
    case RIGHT:
        column_is(RIGHT);
        nxtDisplayCenteredTextLine(1, "on the Right");
        break;
    }
}
```

We used this method in a program that told us what data our IR sensors were reading. We ran into problems where we had to duck to see the NXT’s screen, but we were later told there are ways to view the NXT screen from a computer that is plugged in.
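Each `case` in a switch like this one needs its own `break`. Without it, C (and RobotC) falls through from one case into the next. A LEFT reading would then be overwritten by the MIDDLE and RIGHT text, and the screen would always say "on the Right". The standard-C sketch below makes that visible. `show()` and the `lcd_line` buffer are hypothetical stand-ins for `nxtDisplayCenteredTextLine()` and the NXT screen.

```c
#include <string.h>

typedef enum { LEFT, MIDDLE, RIGHT } Column;

static char lcd_line[32];  /* stand-in for one line of the NXT screen */

/* Stand-in for nxtDisplayCenteredTextLine(1, text): overwrites the line. */
static void show(const char *text) {
    strncpy(lcd_line, text, sizeof lcd_line - 1);
    lcd_line[sizeof lcd_line - 1] = '\0';
}

/* Correct version: each case ends in break. */
void identify_column(Column column) {
    switch (column) {
    case LEFT:
        show("on the Left");
        break;
    case MIDDLE:
        show("in the Middle");
        break;
    case RIGHT:
        show("on the Right");
        break;
    }
}

/* Buggy version: LEFT runs all three show() calls, so the last one wins. */
void identify_column_fallthrough(Column column) {
    switch (column) {
    case LEFT:
        show("on the Left");
        /* falls through */
    case MIDDLE:
        show("in the Middle");
        /* falls through */
    case RIGHT:
        show("on the Right");
    }
}
```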

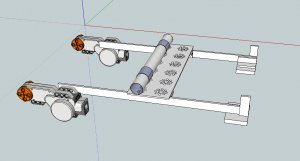

The method of debugging that the people who make RobotC recommend is the debug stream. The debug stream is a window in the RobotC interface that displays any text you send to it with a special function. It is shown in the image above.

It can be used like this:

```c
// debug_stream_ex.c
void place_ring() {
    do_stuff_so_the_ring_is_placed();

    if (executed) {
        writeDebugStreamLine("It worked");
    }
}
```
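As far as we know, RobotC’s `writeDebugStreamLine()` also accepts printf-style format arguments. That makes it handy for logging sensor values, not just "It worked". The standard-C sketch below imitates that kind of logger off the robot. `debug_stream_line()` and the `debug_stream` buffer are hypothetical names, and a line that doesn’t fit is dropped rather than overflowing the buffer.

```c
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

static char debug_stream[256];  /* stand-in for the debug stream window */

/* Hypothetical printf-style logger: formats one line and appends it,
   followed by a newline, to debug_stream. */
void debug_stream_line(const char *fmt, ...) {
    size_t used = strlen(debug_stream);
    size_t room = sizeof debug_stream - used;
    va_list args;
    va_start(args, fmt);
    int n = vsnprintf(debug_stream + used, room, fmt, args);
    va_end(args);
    if (n < 0 || (size_t)n + 1 >= room) {
        debug_stream[used] = '\0';  /* no room for text plus newline: drop it */
        return;
    }
    debug_stream[used + n] = '\n';
    debug_stream[used + n + 1] = '\0';
}
```

In `place_ring()` this could become `debug_stream_line("It worked, lift at %d", encoder_value);`, where `encoder_value` is a hypothetical variable.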

The last method we’ll share is debugging through the preprocessor.

Before we get to the debug method, we need to explain the preprocessor. In C-related languages (C, C++, RobotC), lines that begin with a pound sign (#) are preprocessor directives. Most of the things in your program are evaluated only while the program is running. A preprocessor directive, by contrast, is evaluated when your program is compiled. Take this example:

```c
// preprocessor_ex.c
#include "Autonomous_Base.h" // http://bit.ly/ZCW5Fg

task main() {
    IRmax_sig(ir_sensor);
}
```

When the program is compiled, the preprocessor fetches Autonomous_Base.h and pastes it in so you can use functions from it. When the program is later run, it evaluates IRmax_sig(), a function from Autonomous_Base.h.
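The sketch below shows that a directive is handled before the program ever runs. It uses `#define`, another preprocessor directive, in standard C. The names `SENSOR_COUNT`, `DOUBLE`, and `sensor_readings_total()` are hypothetical.

```c
/* The preprocessor replaces every SENSOR_COUNT with 4 before the compiler
   sees the code; nothing is looked up while the program runs. */
#define SENSOR_COUNT 4

/* Macros are plain text substitution, so the argument is parenthesized:
   written as 2 * x, DOUBLE(1 + 2) would expand to 2 * 1 + 2, which is 4. */
#define DOUBLE(x) (2 * (x))

/* The array size is fixed at compile time because SENSOR_COUNT has
   already been replaced by the literal 4. */
int sensor_readings_total(void) {
    int readings[SENSOR_COUNT] = {10, 20, 30, 40};
    int total = 0;
    for (int i = 0; i < SENSOR_COUNT; i++) {
        total += readings[i];
    }
    return total;
}
```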

There are other preprocessor directives, as well. You might have seen #pragma before. For purposes of debugging, we’re going to use #ifndef.

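```c
// debugging_with_preprocessor_ex.c
void get_the_ring(bool debug_mode) {
    get_ring();

    if (debug_mode) {
        SOME_PREVIOUS_DEBUG_METHOD(); // LED, sound, LCD, or debug stream
    }
}

#ifndef _debug
// _debug is not defined: normal run, debug output off
task main() {
    get_the_ring(false);
}
#else
// _debug is defined: same run, debug output on
task main() {
    get_the_ring(true);
}
#endif
```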

A detailed explanation of the preprocessor is available here. If it seems confusing, that’s because it is.

This debug mechanism is used in combination with the others. Give each of your functions a bool parameter for debug mode (as shown above). Then write #ifndef _debug, which translates to “if _debug is not defined,” and put below it a main task for when debug mode is off. After that task comes an #else, which works like an ordinary else, followed by a second main task that sets every debug-mode bool to true. An #endif closes the block.
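A variation avoids writing two copies of the main task: let the preprocessor pick the value of a single constant, and pass that constant everywhere. The sketch below shows this in standard C. It uses `DEBUG` rather than `_debug`, since standard C reserves many underscore-prefixed names for the compiler and library. `DEBUG_MODE`, `debug_messages`, and the empty `get_ring()` are hypothetical. Compiling with `-DDEBUG` turns debug mode on; without it, debug mode is off.

```c
#include <stdbool.h>
#include <stdio.h>

#ifndef DEBUG
#define DEBUG_MODE false  /* DEBUG not defined: debug output off */
#else
#define DEBUG_MODE true   /* DEBUG defined: debug output on */
#endif

static int debug_messages = 0;  /* counts debug output, for checking */

/* Hypothetical stand-in for the real get_ring(). */
static void get_ring(void) { }

void get_the_ring(bool debug_mode) {
    get_ring();
    if (debug_mode) {
        debug_messages++;
        printf("get_the_ring: done\n");
    }
}
```

In RobotC the main task would then be written once, as `task main() { get_the_ring(DEBUG_MODE); }`.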

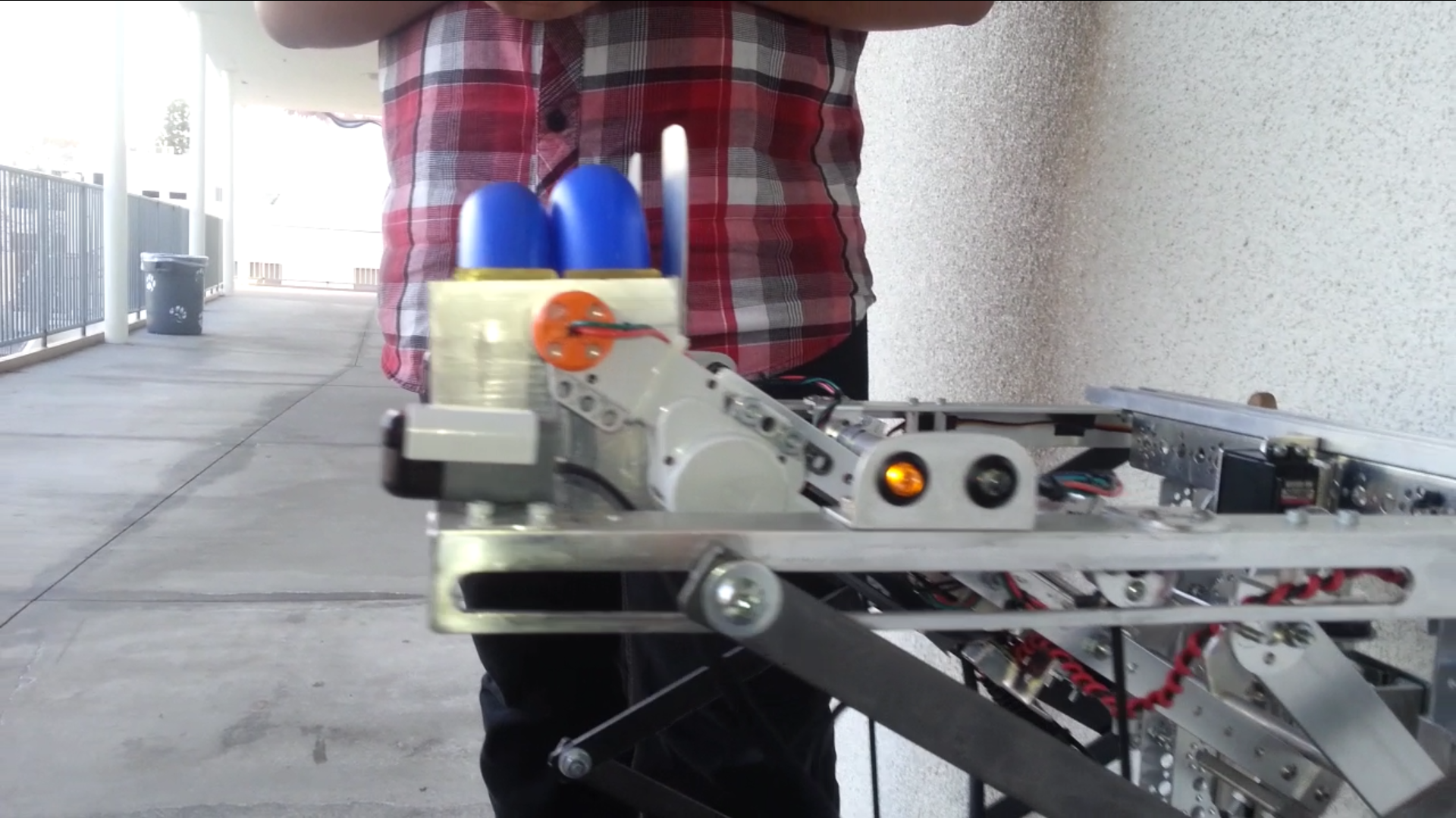

But when is debug mode defined? After you hit F5 to send a compiled copy of your code to your robot, a window like the one pictured above opens.

Pressing Start in that window defines _debug for you. This isn’t our favorite method, but it does have its place.

We hope you have found this tutorial on debugging helpful and that coding your autonomous programs goes more smoothly.

If you’d like further help with debugging, here are a couple of links: